Memory is as Important as Intelligence

Published 2025-09-15 by Mattia • 5 min read

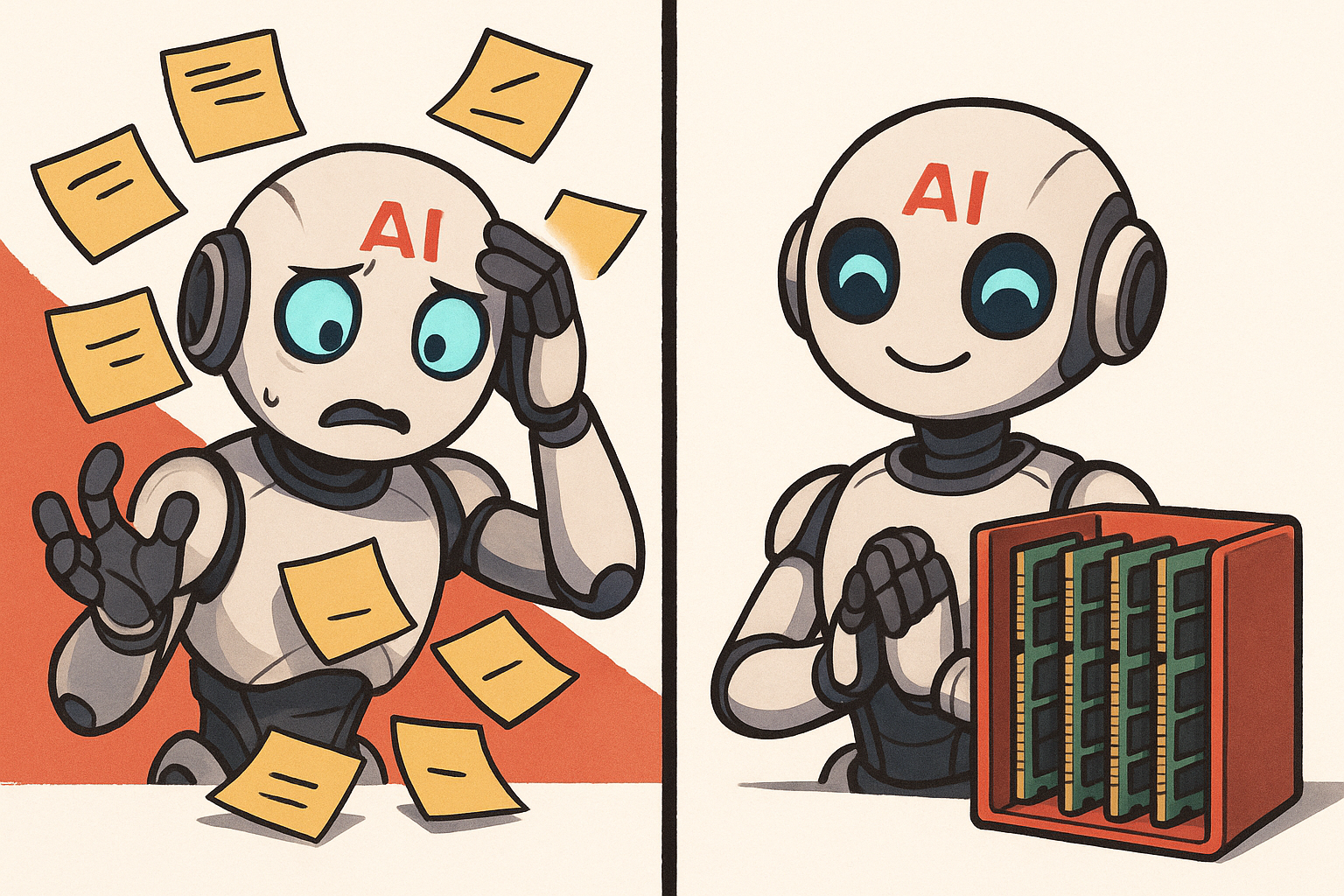

Unlike the human brain, which efficiently draws on all of its experiences, today’s AI systems lack sophisticated memory access management, forcing users to provide the relevant context again and again. What can we do today to solve this problem, and what can we expect in the future?

If we were superintelligent beings without memory, we would have to retrace every chain of reasoning from scratch each time, and we might not achieve better results than if we were less intelligent but had great memory.

With this comparison, I’d like to encourage reflection on one point: although today’s focus is entirely on the race toward more intelligent models (the famous AGI), working on techniques for building and retrieving memory could enormously enhance our systems, even with today’s models.

We Have a Memory Problem

I bet all of you have experienced describing a situation, system, or requirement multiple times to the same AI model or to different models. I bet you’ve copied and pasted prompts or parts of them. I bet that many times you’ve summarized your needs without providing an LLM with all the details you had, out of laziness or simple lack of time.

All this happens because today’s AI systems are heterogeneous, extremely fragmented and don’t have sophisticated memory management. Standard protocols for interacting with external memory don’t exist, and often memory is simply represented by textual content included in a prompt, leaving precise and efficient management to the user.

LLMs and Context

There are two main techniques for making a model take data into account when constructing its responses:

- Training it on that data

- Providing it in the input context

The first is very expensive, not real-time, not feasible on closed-source models and generally effective only for large amounts of data. The second is therefore the popular choice, but it imposes its own trade-offs:

- Context has limited size. These limits are constantly increasing, but they still exist, and providing lots of data in context adds costs, latency, and potential noise.

- We can use RAG (Retrieval-Augmented Generation), which means retrieving relevant data from potentially unlimited external storage and placing in the context only a subset obtained through a search mechanism (perhaps semantic). However, this means delegating to this mechanism the discrimination between relevant and irrelevant data, a task that’s far from trivial and today is typically performed poorly.
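The retrieval step of RAG can be sketched in a few lines. This is a minimal illustration, not a production design: it assumes a tiny in-memory store and uses word-overlap cosine similarity as a stand-in for real semantic search (which would typically rely on embeddings). The names `retrieve`, `build_prompt`, and `MEMORY` are hypothetical and chosen only for this example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, memory: list[str], k: int = 2) -> list[str]:
    """Return up to k memory fragments, most similar to the query first.

    Fragments with no word overlap at all are dropped.
    """
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in memory]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, memory: list[str], k: int = 2) -> str:
    """Put only the retrieved subset of memory into the prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, memory, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical private memory; in practice this would be external storage.
MEMORY = [
    "The billing service is written in Go and deployed on Kubernetes.",
    "Our team prefers PostgreSQL for transactional data.",
    "The office coffee machine is descaled every Friday.",
]
```

Calling `build_prompt("Which database do we use for transactional data?", MEMORY)` places only the PostgreSQL fragment in the context. The weakness described above is visible even here: a question phrased with different words (say, "Where do we keep orders?") would retrieve nothing, because the ranking function decides relevance, not the model.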

Our Memory is Different

We can consider an LLM as a collective intelligence trained on publicly accessible data from all of humanity (as a first rough approximation), but it knows nothing about our private memory. This is why LLMs are generally better than us at answering general knowledge questions, but to answer our own questions, they need context that we must explicitly provide.

The problem is that providing this context is essentially a manual operation today, with very few supporting tools. Our mind, instead, accesses all our memory in a way that is complex (I honestly couldn’t describe it) but precise and extremely efficient. Every past experience contributes, with different weights, to subsequent cognitive processes and does so intrinsically, without anyone having to select which memory is useful and should be retrieved to contribute to a particular thought.

From this perspective, currently available AI systems are enormously deficient in memory management.

The State of the Art

What we can do with today’s available tools is use discipline and method to build and centralize our memory, whether personal or, for an organization, collective. In practice, this means collecting context fragments in a unified storage that is as accessible as possible.

There are already some techniques designed for this, like the Memory Bank pattern introduced by Cline and since adopted by many other coding assistants. The idea is that, while we interact with an AI agent, the agent itself contributes to a permanent knowledge base, recording the details that we gradually write in our prompts. We can then add this knowledge base to the context, using it as permanent memory.
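To make the idea concrete, here is a minimal sketch of a file-backed knowledge base in the spirit of this pattern. It is not Cline’s actual implementation: the `MemoryBank` class, its method names, and the single-file Markdown layout are all assumptions made for illustration.

```python
from pathlib import Path


class MemoryBank:
    """Hypothetical persistent knowledge base stored as a Markdown bullet list."""

    def __init__(self, path: str) -> None:
        self.path = Path(path)
        # Create the file (and its folder) on first use so reads never fail.
        self.path.parent.mkdir(parents=True, exist_ok=True)
        self.path.touch(exist_ok=True)

    def facts(self) -> list[str]:
        """Return every stored fact, in the order it was recorded."""
        lines = self.path.read_text(encoding="utf-8").splitlines()
        return [line[2:] for line in lines if line.startswith("- ")]

    def remember(self, fact: str) -> bool:
        """Append a fact unless it is empty or already known.

        Returns True if the fact was written, False otherwise.
        """
        fact = " ".join(fact.split())  # collapse whitespace and newlines
        if not fact or fact in self.facts():
            return False
        with self.path.open("a", encoding="utf-8") as handle:
            handle.write(f"- {fact}\n")
        return True

    def as_context(self) -> str:
        """Render the whole bank as a block to prepend to a prompt."""
        body = "\n".join(f"- {fact}" for fact in self.facts())
        return f"Known project facts:\n{body}" if body else ""
```

In use, an agent would call something like `bank.remember("The API uses OAuth2")` whenever a prompt reveals a durable detail, and later prepend `bank.as_context()` to new prompts. Because the bank is a plain file, it survives across sessions and can be reviewed or edited by hand.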

There are also services focusing on memory management. One of the most interesting is mem0, which aims to offer a “universal, self-improving memory layer for LLM applications.” One of their side products, a very simple one called OpenMemory, is also worth a look. It’s essentially a Google Chrome add-on that allows you to keep a history of, reference, and share prompts across different AI chatbots (ChatGPT, Claude, Gemini, Perplexity, etc.).

Many organizations are also moving to purchase or create their own systems for collecting and storing shared knowledge bases. Semantic search remains a very complex topic, because simple RAG systems exposed through MCP servers suffer from poor precision. The temptation, given the growing context limits of many models, is to bring all data into context, but then costs and latency become the problem.

What to Expect in the Future?

I’m convinced that in the coming months, major industry players will move with new solutions for memory management. Confirming this, in a recent interview, Sam Altman said “People want memory”. He also said he sees memory as the key for making ChatGPT truly personal. It needs to remember who you are, your preferences, routines and quirks, and adapt accordingly.

This will open up major issues related to security and privacy, because we’re talking about private data that we currently keep on local storage or on cloud services that, at least as far as we assume, don’t use it without our explicit consent.

This data is both the most sensitive and the most useful for enabling AI to create value for us.