Challenges and Paths of AI-assisted Engineering

Published 2025-09-13 by Mattia • 4 min read

In a recent paper by researchers from MIT, University of California, Stanford University, and other institutions, I found an analysis of the challenges that remain open in AI-assisted engineering and of potential solutions yet to be explored.

Link to the original paper: Challenges and Paths Towards AI for Software Engineering

First, a brief digression. Until a few years ago, I would never have dreamed of having the time to carefully read a 76-page scientific paper. Today, however, I was able to learn several new things by relying on a simple yet powerful AI-assisted workflow:

- I asked Perplexity to find all papers from the last 6 months on AI-assisted coding

- I selected those that seemed most interesting based on their titles

- I downloaded them, uploaded them to ChatGPT, and used a custom prompt I had prepared to extract the most original and least trivial content from each, summarized in bullet points

- For the elements I found most interesting, I asked for further elaboration, citing the original paper where possible

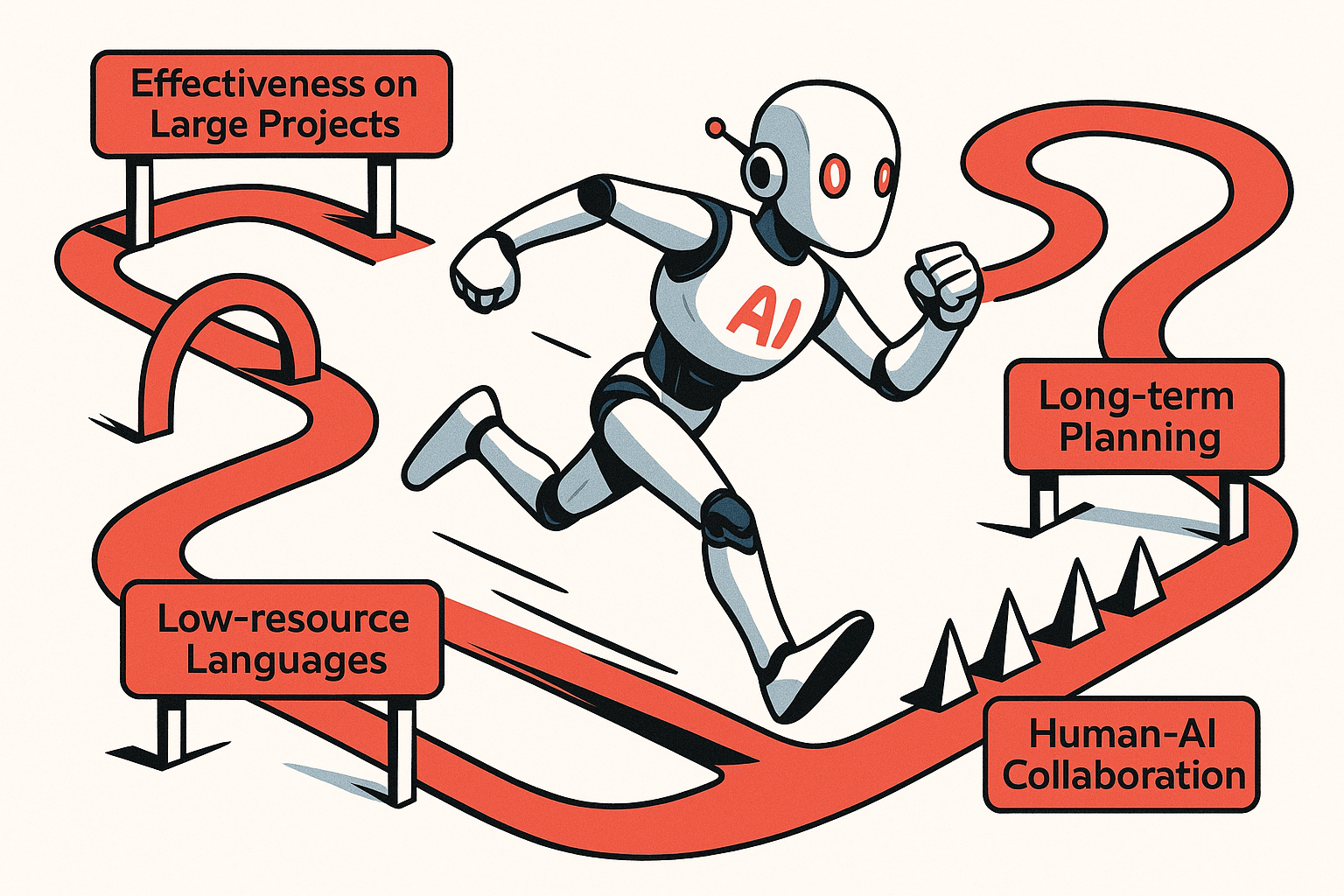

Returning to the paper, below are the main challenges it cites that, in my own experience, are still worth exploring.

Challenges

Effectiveness on Large Projects

Limits on the context size models can work with (and the associated costs) reduce their effectiveness on large-scale projects. In new projects, modularity helps us limit the context the model needs, but in complex, poorly decoupled existing systems this is not possible. Code assistants have built sophisticated indexing and search mechanisms, but significant room for improvement remains.
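
To make the context constraint concrete, here is a minimal Python sketch of packing the most relevant snippets into a fixed token budget. It is not taken from the paper: the `Snippet` type, the file paths, the relevance scores, and the "about 4 characters per token" estimate are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    path: str         # hypothetical file path
    text: str         # file or chunk content
    relevance: float  # score from some upstream ranker (assumed to be given)


def estimate_tokens(text: str) -> int:
    # Crude assumption: about 4 characters per token. Real tokenizers differ.
    return max(1, len(text) // 4)


def pack_context(snippets: list[Snippet], budget: int) -> list[Snippet]:
    """Greedily keep the most relevant snippets that still fit the budget."""
    chosen = []
    used = 0
    for s in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = estimate_tokens(s.text)
        if used + cost <= budget:
            chosen.append(s)
            used += cost
    return chosen


snippets = [
    Snippet("billing/invoice.py", "x" * 400, 0.9),  # ~100 tokens
    Snippet("billing/tax.py", "x" * 800, 0.7),      # ~200 tokens
    Snippet("utils/strings.py", "x" * 200, 0.2),    # ~50 tokens
]
selected = pack_context(snippets, budget=160)
print([s.path for s in selected])
```

With a budget of 160 tokens, the second most relevant file is too large and gets dropped in favor of a less relevant one. In a poorly decoupled codebase, that dropped file may be exactly the one the model needed.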

Long-term Planning

LLMs tend to duplicate code, fail to respect modularity, and don’t design robust abstractions. In general, they lack long-term planning capabilities on large systems because they have limited contexts and limited ability to identify the most relevant parts of the context.

Low-resource Languages

For underrepresented languages (COBOL, Lean, internal DSLs, etc.), where few examples are available in public training sets, LLMs are noticeably less accurate.

Library and Framework Updates

Models struggle to keep up with new versions, updated paradigms, and deprecations because they are retrained only periodically, so their knowledge is frozen at the last training cutoff. Using tools that retrieve up-to-date documentation and put it in context can mitigate this problem, but models often continue to propose outdated versions or mix versions up.

Human-AI Collaboration

Models are not good at asking developers for clarification or at handling design trade-offs. When faced with incomplete or unclear specifications, they tend to fill the gaps with their own assumptions instead of explicitly asking users for more details.

Integration with Programming Tools

Many coding assistants have introduced sophisticated IDE integrations, but models are still unable to fully leverage tools like debuggers, profilers, linters, static and dynamic analysis tools, etc.
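
A minimal sketch of what "leveraging a tool" could look like: the output of a checker is fed back into a revision step until the check passes. This is an illustration under stated assumptions, not how any particular assistant works. Python's built-in `ast.parse` stands in for a real linter or analyzer, and `fake_model_revise` is a hypothetical placeholder for a model call.

```python
import ast
from typing import Callable, Optional


def check_syntax(source: str) -> Optional[str]:
    """Return a diagnostic message, or None if the source parses."""
    try:
        ast.parse(source)
        return None
    except SyntaxError as err:
        return f"line {err.lineno}: {err.msg}"


def iterate_with_tool(
    source: str,
    revise: Callable[[str, str], str],
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Feed diagnostics back to `revise` until the check passes or rounds run out."""
    for round_no in range(max_rounds):
        diagnostic = check_syntax(source)
        if diagnostic is None:
            return source, round_no
        source = revise(source, diagnostic)
    return source, max_rounds


# Hypothetical stand-in for a model call: it only knows how to add a missing colon.
def fake_model_revise(source: str, diagnostic: str) -> str:
    return source.replace("def add(a, b)\n", "def add(a, b):\n")


broken = "def add(a, b)\n    return a + b\n"
fixed, rounds = iterate_with_tool(broken, fake_model_revise)
print(rounds)
print(fixed)
```

The same loop shape applies to debuggers, profilers, or static analyzers. The hard part, which this sketch skips, is getting the model to interpret richer tool output and act on it.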

Evaluation and Benchmarking

Current benchmarks focus on narrow tasks and are contaminated by training data. They are poor at measuring a model’s ability to work on real projects with human interaction, or the quality of the code it produces.
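
One common way to look for contamination is to check how much of a benchmark item appears verbatim in a training document. Below is a minimal sketch based on word n-gram overlap. The choice of `n = 5`, the whitespace tokenization, and the sample strings are assumptions for illustration, not the method used in the paper.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """All runs of n consecutive whitespace-separated tokens."""
    tokens = text.split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(benchmark_item: str, corpus_doc: str, n: int = 5) -> float:
    """Fraction of the item's n-grams that also appear in the corpus document."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0
    return len(item_grams & ngrams(corpus_doc, n)) / len(item_grams)


item = "def fib(n): return n if n < 2 else fib(n - 1) + fib(n - 2)"
leaked_doc = "# utils\n" + item + "\nprint(fib(10))"
unrelated_doc = "print('hello world')"

print(overlap_ratio(item, leaked_doc))
print(overlap_ratio(item, unrelated_doc))
```

A ratio close to 1 suggests the item was likely seen during training. Paraphrased or reformatted copies would slip past a check this simple.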

Solutions to Explore

Data Collection

Much of the battle, as always, is fought over the datasets used for model training. Today there is little filtering on the quality of the code that ends up in training sets, and much of that code comes from open source communities. This means models are trained in large part on low-quality, legacy, poorly maintained codebases, while enterprise codebases, which are generally private, are underrepresented.

Increasing the quality of these training sets would have very positive effects on AI model capabilities.

Training

Reinforcement learning is a hot and recurring topic. RL environments where the model is rewarded for correct, high-quality, and performant code could improve it significantly. Similarly, it would be important to train models to work as part of a team: to ask for clarification and to proactively prompt users for more context.
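
As a rough idea of what such a reward could look like, here is a minimal sketch that scores a candidate function by its test pass rate and adds a small bonus when it is fully correct and fast. The weights, the time limit, and the test cases are assumptions for illustration. Code quality, the hardest part to quantify, is not measured here at all.

```python
import time
from typing import Callable


def reward(
    candidate: Callable[[int], int],
    tests: list[tuple[int, int]],
    time_limit_s: float = 0.5,
) -> float:
    """Pass rate on the tests, plus a small bonus for a fast, fully correct run."""
    passed = 0
    start = time.perf_counter()
    for arg, expected in tests:
        try:
            if candidate(arg) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failed test
    elapsed = time.perf_counter() - start
    pass_rate = passed / len(tests)
    speed_bonus = 0.1 if (pass_rate == 1.0 and elapsed <= time_limit_s) else 0.0
    return pass_rate + speed_bonus


# Hypothetical task: implement "square of x".
tests = [(2, 4), (3, 9), (-1, 1)]
good = lambda x: x * x
bad = lambda x: x + x  # passes only the first test by coincidence

print(reward(good, tests))
print(reward(bad, tests))
```

Note how the wrong candidate still earns a partial reward by passing one test by accident. Designing rewards that cannot be gamed this way is part of the challenge.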

Tool Integration

Better mechanisms for retrieving relevant code through semantic embeddings can significantly improve the quality of the context we provide to the model and, with it, the accuracy of the results. Deeper, higher-quality integration with development tools would also allow an agent to iterate autonomously until it reaches the desired result.
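
The retrieval idea can be sketched as ranking code chunks by cosine similarity to the query. In this minimal example, `embed` is only a bag-of-words stand-in (an assumption that keeps the sketch self-contained): it matches on shared words, whereas a real embedding model would also capture meaning. The chunk names and descriptions are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words count vector.
    return Counter(text.lower().replace("_", " ").split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(query: str, chunks: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the names of the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(
        chunks, key=lambda name: cosine(q, embed(chunks[name])), reverse=True
    )
    return ranked[:top_k]


chunks = {
    "parse_invoice": "parse invoice pdf and extract total amount",
    "send_email": "send email notification to user",
    "compute_tax": "compute tax amount for invoice total",
}
results = search("extract invoice total", chunks, top_k=2)
print(results)
```

Swapping `embed` for a learned embedding model leaves the ranking logic unchanged, so the retrieval loop and the embedding quality can be improved independently.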